"Data is the oil of the 21st century" is a statement you have probably heard many times.

Is your company ready to get the most out of its data?


The number of devices is expected to increase by 2030, which will increase the volume of data

Of companies use Big Data for competitive advantage

Of companies aim to 'do more with less', increasing ROI through analytics solutions to gain a competitive advantage

Why is an Analytics solution necessary?

Companies have an increasing amount of data at their disposal, coming from numerous information sources. However, in many cases they lack an analytical environment capable of ingesting, modeling, analyzing and displaying the valuable information that lies behind this data.

In an increasingly competitive, global and changing environment, the ability to make decisions quickly is essential. But if the underlying information is incorrect or out of date, the path we take will probably not be the right one.

The complexity of the data analysis architecture

We are all familiar with the architecture of the traditional analysis chain:

- Data Source, usually a relational database where our business information is stored.

- ETL (Extract - Transform - Load) solution, where data is extracted from the source, the necessary transformations are performed and, finally, the result is loaded into a database usually called a Data Warehouse.

- Analysis and Visualization Solution to support decision-making based on the current picture, forecasts, etc.
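As a minimal sketch of the three stages listed above, the following example uses two in-memory SQLite databases as stand-ins for the data source and the Data Warehouse. The table names (`orders`, `customer_totals`) and the helper functions are hypothetical and chosen only for illustration.

```python
import sqlite3


def build_demo_source() -> sqlite3.Connection:
    """Create a toy operational database (hypothetical schema)."""
    source = sqlite3.connect(":memory:")
    source.execute("CREATE TABLE orders (customer TEXT, amount_cents INTEGER)")
    source.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("acme", 1200), ("acme", 800), ("globex", 500)],
    )
    source.commit()
    return source


def run_etl(source: sqlite3.Connection, warehouse: sqlite3.Connection) -> int:
    """Run one extract-transform-load pass and return the number of rows loaded."""
    # Extract: read the raw rows from the source database.
    rows = source.execute("SELECT customer, amount_cents FROM orders").fetchall()

    # Transform: aggregate the order amounts per customer.
    totals = {}
    for customer, amount_cents in rows:
        totals[customer] = totals.get(customer, 0) + amount_cents

    # Load: write the aggregated result into the warehouse table.
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS customer_totals "
        "(customer TEXT PRIMARY KEY, total_cents INTEGER)"
    )
    warehouse.executemany(
        "INSERT OR REPLACE INTO customer_totals VALUES (?, ?)",
        list(totals.items()),
    )
    warehouse.commit()
    return len(totals)
```

The analysis and visualization stage would then query `customer_totals` instead of the operational `orders` table.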

At a conceptual level, there is no doubt that the model works. In practice, however, it is often hard to manage: heterogeneous solutions are frequently chosen for each stage, which complicates both the implementation and the life cycle management of the solution.

On the other hand, we have to analyze the costs of the solution. In a classic scheme, where some type of licensing is required (either for the computational capacity assigned to the Data Warehouse or for the ETL solution), there are usually capital costs (CAPEX) that often make the project unfeasible.

It is precisely here that the public cloud philosophy, and especially the advantages of pay-per-use, becomes the axis on which the new data analysis architecture pivots.

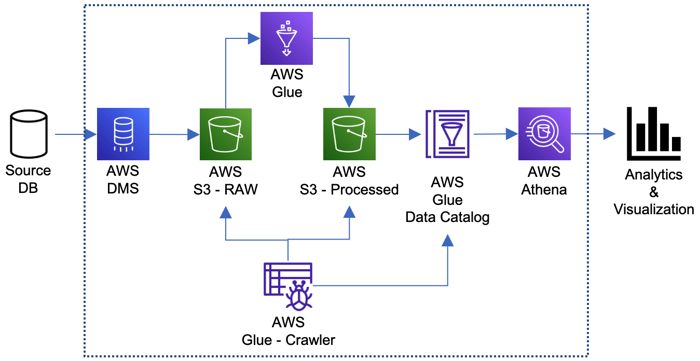

The new data analytics architecture with AWS Analytics

The scheme we start from is as follows:

[Figure: AWS Analytics architecture diagram]

As a premise, we will assume that the two ends (Origin and Analysis / Visualization) are fixed, because they tend to be somewhat "imposed" elements:

- Origin: These will be the data sources in the company, such as ERP and/or CRM databases, external information sources, etc.

- Analysis / Visualization: End users are often unwilling to change the tools they already use to analyze and visualize information and dashboards.

Because of this, the architecture presented below is independent of both the source and the analytics and visualization tool in question. That said, we will focus on the centerpiece, the engine of the new AWS Analytics architecture.

ETL + Data Warehouse on a pay-per-use basis

As can be seen in the previous image, the components that facilitate the traditional functionality under pay-per-use premises are the following:

1. AWS DMS (Database Migration Service):

A service for migrating and synchronizing databases that, in this case, is responsible for importing the data from the source databases into the AWS S3 service.

Regarding billing, the cost of this service depends on the computational capacity assigned to the extraction task and on the time the task takes to complete. Once the import has finished, the cost drops to zero until the next data synchronization is performed.
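The billing model described above (capacity multiplied by running time, and nothing while idle) can be illustrated with a little arithmetic. This is only a sketch: the hourly rate, the run duration and the function name `task_cost` are assumptions made for the example, not AWS prices.

```python
HYPOTHETICAL_HOURLY_RATE = 0.20  # assumed price per hour of assigned capacity, not an AWS quote
HOURS_IN_MONTH = 730             # approximate number of hours in a month


def task_cost(hourly_rate: float, run_hours: float, runs_per_month: int) -> float:
    """Monthly cost of a task that is billed only while it is running."""
    return hourly_rate * run_hours * runs_per_month


# A synchronization that runs 1.5 hours every night for 30 nights.
nightly_sync = task_cost(HYPOTHETICAL_HOURLY_RATE, run_hours=1.5, runs_per_month=30)

# The same capacity kept running for the whole month, for comparison.
always_on = task_cost(HYPOTHETICAL_HOURLY_RATE, run_hours=HOURS_IN_MONTH, runs_per_month=1)

print(f"nightly sync: {nightly_sync:.2f}  always on: {always_on:.2f}")
```

Under these assumed figures, the nightly synchronization is billed for 45 hours a month rather than 730.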

2. AWS S3:

An object storage service that acts as the landing area for the data extracted from the source databases, as well as for the data processed by the AWS Glue service.

In terms of cost, you are billed for the amount of storage used and the number of read and write requests performed.


3. AWS Glue:

Data integration service in charge of performing the necessary transformation tasks and, subsequently, updating the data and metadata of the data catalog, which will finally expose the data ready for consumption.

As with any serverless service, its billing depends on the time spent processing the imported data, as well as on the computational capacity needed to perform this task.

4. AWS Athena:

Amazon Web Services interactive query service. It is in charge of executing the queries coming from the Analytics and Visualization tools on the data processed and exposed in the AWS Glue data catalog.

As it is serverless, this service is billed according to the amount of information scanned in each query, so the more optimized the query is, the lower the cost of this service.
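To make the link between query optimization and cost concrete, here is a small sketch that compares a full scan with a query restricted to a single partition. The price per terabyte, the decimal terabyte, the monthly partition layout and the function names are all assumptions for illustration; check current AWS pricing for real figures.

```python
from typing import Dict, Optional, Set

ASSUMED_PRICE_PER_TB = 5.00  # hypothetical price per terabyte scanned
BYTES_PER_TB = 10**12        # decimal terabyte, an assumption for this sketch
GB = 10**9


def bytes_scanned(partition_sizes: Dict[str, int],
                  wanted: Optional[Set[str]] = None) -> int:
    """Sum the sizes of the partitions a query reads; None means a full scan."""
    if wanted is None:
        return sum(partition_sizes.values())
    return sum(size for key, size in partition_sizes.items() if key in wanted)


def query_cost(scanned_bytes: int,
               price_per_tb: float = ASSUMED_PRICE_PER_TB) -> float:
    """Cost of one query billed by the amount of data scanned."""
    return scanned_bytes / BYTES_PER_TB * price_per_tb


# Hypothetical table split into twelve monthly partitions of 50 GB each.
monthly_partitions = {f"2024-{month:02d}": 50 * GB for month in range(1, 13)}

full_scan_cost = query_cost(bytes_scanned(monthly_partitions))
one_month_cost = query_cost(bytes_scanned(monthly_partitions, {"2024-03"}))

print(f"full scan: {full_scan_cost:.2f}  single month: {one_month_cost:.2f}")
```

In this sketch, a query that only needs one month of data scans a twelfth of the table and costs a twelfth as much.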

Benefits of AWS Analytics Solutions

We are firm believers that technology must be at the service of the business and must be elastic enough to offer optimum performance at all times, without becoming an obstacle.

Proposals such as this one are based on the following pillars:

- Cost optimization

- Pay-per-use

- Elasticity

- Serverless

Together, they let us know in advance that we will pay the right amount and that we will have the necessary performance at all times, without investing in licenses for third-party solutions that are often tied to the number of users or to a computing capacity fixed in advance (usually sized for the worst case, the peak).
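The difference between capacity fixed in advance for the peak and elastic pay-per-use can be sketched with a toy demand profile. The demand figures and the unit price are hypothetical, and the sketch assumes the same unit price in both models, which will not hold in every real comparison.

```python
from typing import List

HYPOTHETICAL_UNIT_PRICE = 0.10  # assumed price per capacity unit per hour


def fixed_capacity_cost(hourly_demand: List[int], unit_price: float) -> float:
    """Capacity is sized for the peak hour and paid for during every hour."""
    return max(hourly_demand) * unit_price * len(hourly_demand)


def pay_per_use_cost(hourly_demand: List[int], unit_price: float) -> float:
    """Only the capacity actually used in each hour is paid for."""
    return sum(hourly_demand) * unit_price


# One day: 20 quiet hours needing 2 units, 4 peak hours needing 10 units.
daily_demand = [2] * 20 + [10] * 4

fixed = fixed_capacity_cost(daily_demand, HYPOTHETICAL_UNIT_PRICE)
elastic = pay_per_use_cost(daily_demand, HYPOTHETICAL_UNIT_PRICE)

print(f"fixed for peak: {fixed:.2f}  pay-per-use: {elastic:.2f}")
```

The more uneven the demand, the larger the gap between the two figures; with perfectly flat demand they coincide.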

Why Neteris

At Neteris we help you evaluate your business needs and translate them into IT language, choosing the solution or service that best meets the requirements of each company. Therefore, do not hesitate to contact us to study your case and analyze the best way to meet your business requirements.

